
Apache Kafka Introduction

Apache Kafka is used to send a continuous stream of data from producers to consumers through a mediator.

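- It is often grouped with message-queue tools such as those based on the Advanced Message Queuing Protocol (AMQP), but Kafka uses its own binary protocol over TCP rather than AMQP.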

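- It is an Apache Software Foundation project written in Scala and Java. The binary downloads are built for a specific Scala version, for example Scala 2.13.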

- It supports sending/receiving data between various systems.

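- It is language-independent: clients exist for many programming languages, and because Kafka stores message values as plain bytes, the data itself can be in any format.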

- It works only with topics; it has no separate queue concept. A message published to a topic can be read by one or more consumers, so a dedicated queue is not needed.
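The topic-instead-of-queue idea can be sketched with a small, stdlib-only Java model. This is a simplified illustration, not Kafka's internals: `ToyTopic` and `ToySubscriber` are hypothetical names, and each subscriber stands for an independent consumer (in real Kafka, a separate consumer group) that keeps its own read position, so reading a message does not remove it for anyone else.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified in-memory model of a topic (hypothetical, not Kafka's implementation).
// Messages are appended to a log and are NOT removed when someone reads them.
class ToyTopic {
    private final List<String> log = new ArrayList<>();

    void publish(String message) {
        log.add(message);
    }

    String read(int position) {
        return log.get(position);
    }

    int size() {
        return log.size();
    }
}

// Each subscriber keeps its own read position, so one subscriber reading a
// message does not take it away from the others (unlike a classic queue).
class ToySubscriber {
    private final ToyTopic topic;
    private int position = 0;

    ToySubscriber(ToyTopic topic) {
        this.topic = topic;
    }

    // Returns every message published since this subscriber last polled.
    List<String> poll() {
        List<String> received = new ArrayList<>();
        while (position < topic.size()) {
            received.add(topic.read(position));
            position++;
        }
        return received;
    }
}

class TopicFanOutDemo {
    public static void main(String[] args) {
        ToyTopic topic = new ToyTopic();
        ToySubscriber billing = new ToySubscriber(topic); // hypothetical consumer names
        ToySubscriber audit = new ToySubscriber(topic);

        topic.publish("hi");
        System.out.println(billing.poll()); // [hi]
        System.out.println(audit.poll());   // [hi] (the same message, delivered again)
    }
}
```

In a classic queue, the second `poll()` would find nothing because the first reader would have consumed the message.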

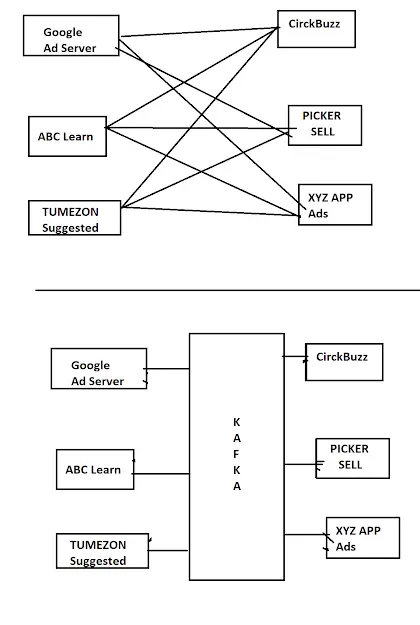

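If multiple systems communicate with each other directly, every pair of systems needs its own connection, and the number of connections grows quickly as systems are added. Kafka helps here by acting as a single mediator that every system connects to.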
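The growth in connections can be worked out with simple arithmetic. This sketch assumes every pair of systems needs exactly one direct link (n(n-1)/2 links for n systems), while with a mediator each system needs just one connection to it; `IntegrationCount` is a hypothetical helper.

```java
// Counts integrations needed with and without a central mediator such as Kafka.
// Simplified arithmetic: one link per pair of systems vs one link per system.
class IntegrationCount {
    // Every pair of systems needs its own connection: n choose 2.
    static long pointToPoint(long systems) {
        return systems * (systems - 1) / 2;
    }

    // Every system connects only to the mediator.
    static long viaMediator(long systems) {
        return systems;
    }

    public static void main(String[] args) {
        for (long n : new long[] {4, 10, 50}) {
            System.out.println(n + " systems: " + pointToPoint(n)
                    + " direct links vs " + viaMediator(n) + " via Kafka");
        }
    }
}
```

For 10 systems, that is 45 direct links versus 10 connections to Kafka.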

Kafka Software Overview

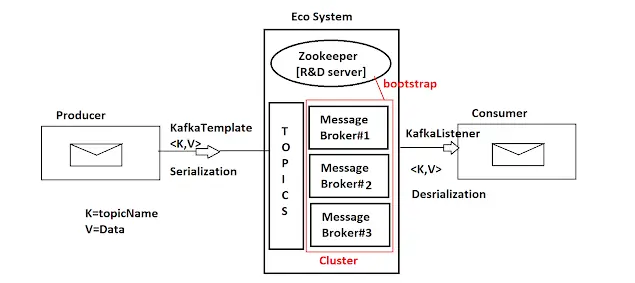

- The complete Kafka software is called the Ecosystem. It contains the Cluster, the bootstrap server, and the Topic section.

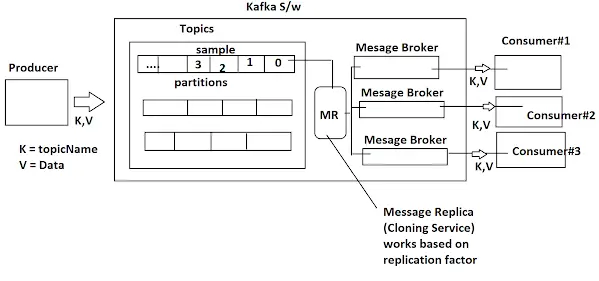

- A message broker reads data from the Topic section, clones it, and sends it to consumers based on the topic name. There are usually multiple message brokers, so if one goes down, another one is used.

- All message brokers and topic sections are controlled by Zookeeper (Apache ZooKeeper, a coordination service), which handles tasks such as creating topics, storing metadata, and allocating message brokers to consumers.

- The collection of message broker instances is called a Cluster. When we start the Kafka software, the cluster is created with one instance. Additional message brokers are added on demand (depending on the number of consumers) and are controlled by Zookeeper.

- A topic is the memory area that stores data in partitions. Its inputs are the topic name, the number of partitions, and the replication factor (the number of copies kept of each message). Both the number of partitions and the replication factor default to 1.

Assume a producer application has some data to send. The producer transfers the data to the Kafka Ecosystem, which stores it in a topic in key-value format, where the key is the topic name and the value is the data. The data is serialized for transfer and stored in partitions; if no partition count is given, one default partition with index zero is created. Zookeeper then allocates a message broker to transfer the data to the consumer. Every consumer gets its own message broker (N consumers = N message brokers). The message broker asks the MR (Message Replica, the cloning service) for a copy of the message, and that copy is deserialized and transferred to the consumer.
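The producer-to-consumer path can be traced with a minimal in-memory sketch: the producer side serializes text to UTF-8 bytes, the topic keeps them in one default partition (index 0) and hands back the offset of each stored record, and the consumer side deserializes the bytes back to text. `ToyFlowTopic`, `send`, and `receive` are hypothetical names, not Kafka APIs; in the real Kafka Java client, the topic name is passed alongside a record's key and value, and the built-in `StringSerializer`/`StringDeserializer` also use UTF-8 by default.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Simplified, in-memory sketch of the producer -> topic -> consumer flow.
// Names are hypothetical; this does not talk to a real Kafka server.
class ToyFlowTopic {
    final String name;
    // One default partition (index 0) holding serialized records.
    private final List<byte[]> partition0 = new ArrayList<>();

    ToyFlowTopic(String name) {
        this.name = name;
    }

    // Producer side: serialize the text to bytes, append it, return its offset.
    long send(String data) {
        partition0.add(data.getBytes(StandardCharsets.UTF_8));
        return partition0.size() - 1;
    }

    // Consumer side: read the bytes stored at an offset and deserialize them.
    String receive(long offset) {
        return new String(partition0.get((int) offset), StandardCharsets.UTF_8);
    }
}

class ToyFlowDemo {
    public static void main(String[] args) {
        ToyFlowTopic topic = new ToyFlowTopic("knowprogramone");
        long first = topic.send("hi");
        long second = topic.send("hello");
        System.out.println(first + " -> " + topic.receive(first));   // 0 -> hi
        System.out.println(second + " -> " + topic.receive(second)); // 1 -> hello
    }
}
```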

Q) What is the full Kafka software called?

The Ecosystem = Zookeeper + Topics + Cluster.

Q) What is a cluster? What is its default size on startup?

A cluster is a collection of message brokers. A message broker is mediator software that sends data to consumers. The default size on startup is one.

Q) What is the Topic section? How does it store data?

It is a memory area that holds data. Data is stored in key-value format as topicName=data, using partitions. Partition indexes start from zero, and the default number of partitions is one.

Q) What is MR? When is it executed?

MR stands for Message Replica, which creates clones (copies) of the actual data. It runs before the data is sent to the consumer.

- Serialization: the process of converting a Java object into a format the network understands. Object -> network format or file system.

- Deserialization: converting response data (network data) back into a Java object. Network format -> Object.
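Both directions can be shown with Java's built-in object serialization, using the `eid`, `ename`, and `esal` fields from the console example later in this post. The `Employee` class and the helper names are assumptions for illustration. Bytes produced this way can only be turned back into an object by Java code that has the same class, which is why the next paragraph recommends global formats.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical sample record reusing the fields from the console example.
class Employee implements Serializable {
    private static final long serialVersionUID = 1L;
    final int eid;
    final String ename;
    final double esal;

    Employee(int eid, String ename, double esal) {
        this.eid = eid;
        this.ename = ename;
        this.esal = esal;
    }
}

class JavaSerializationDemo {
    // Serialization: Java object -> bytes that can go over the network or into a file.
    static byte[] serialize(Employee e) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(e);
        }
        return bytes.toByteArray();
    }

    // Deserialization: bytes -> Java object (the reader needs the Employee class).
    static Employee deserialize(byte[] data) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return (Employee) in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] wire = serialize(new Employee(10, "john", 2.2));
        Employee copy = deserialize(wire);
        System.out.println(copy.eid + " " + copy.ename + " " + copy.esal); // 10 john 2.2
    }
}
```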

The producer can be a Java application while the consumer may be a non-Java application. In that case, we should be able to send and read data in global formats such as String (plain text), JSON, or XML.
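A global format such as JSON can be read by consumers written in any language. This sketch builds the JSON text by hand only to stay free of external libraries (a library such as Jackson is the usual choice in real projects); `JsonEmployee` and `toJson` are hypothetical names, and the sketch assumes `ename` contains no quotes or backslashes, since it performs no escaping.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical record converted to JSON text instead of Java-only binary data.
class JsonEmployee {
    final int eid;
    final String ename;
    final double esal;

    JsonEmployee(int eid, String ename, double esal) {
        this.eid = eid;
        this.ename = ename;
        this.esal = esal;
    }

    // Hand-built JSON; assumes ename needs no escaping.
    String toJson() {
        return "{\"eid\":" + eid + ",\"ename\":\"" + ename + "\",\"esal\":" + esal + "}";
    }
}

class JsonDemo {
    public static void main(String[] args) {
        String json = new JsonEmployee(10, "john", 2.2).toJson();
        // The UTF-8 bytes of this text are what would travel to the consumer.
        byte[] wire = json.getBytes(StandardCharsets.UTF_8);
        System.out.println(json); // {"eid":10,"ename":"john","esal":2.2}
        System.out.println(new String(wire, StandardCharsets.UTF_8).equals(json)); // true
    }
}
```

Any consumer that can decode UTF-8 text and parse JSON can read this message, whatever language it is written in.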

- Data is sent or received in K=V Format. Here K=TopicName, V=Data.

- Data is stored inside the Topic section, and every topic is identified by a name (topicName = key).

- Topic data is stored in partitions (1 by default). Partitions are numbered from zero, and every message within a partition gets a sequential index number called its ‘offset’.

- Partitions are created by the Kafka software itself. However, the programmer must specify the number of partitions when creating the topic.
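Partitions and offsets can be illustrated with a simplified model of a topic that has several partitions. In the Kafka clients, a record may also carry an optional message key, and records with the same key go to the same partition; Kafka's default partitioner does this by hashing the serialized key with murmur2. This sketch uses `String.hashCode()` instead, purely to stay short, so its partition numbers will not match real Kafka. `PartitionedTopic` and `append` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of a topic with several partitions (not Kafka's implementation).
class PartitionedTopic {
    private final List<List<String>> partitions = new ArrayList<>();

    PartitionedTopic(int numPartitions) {
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    // Same key -> same partition. Uses hashCode() here; real Kafka uses murmur2.
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    // Appends the value and returns {partition, offset}.
    // Offsets start at 0 and count up separately in each partition.
    int[] append(String key, String value) {
        int p = partitionFor(key);
        List<String> log = partitions.get(p);
        log.add(value);
        return new int[] {p, log.size() - 1};
    }
}

class PartitionDemo {
    public static void main(String[] args) {
        PartitionedTopic topic = new PartitionedTopic(3);
        int[] a = topic.append("emp-10", "hi");    // hypothetical key
        int[] b = topic.append("emp-10", "hello");
        // Same key, so same partition; the offset grows by one within it.
        System.out.println("partition " + a[0] + ", offset " + a[1]);
        System.out.println("partition " + b[0] + ", offset " + b[1]);
    }
}
```

With a single partition (the default), every message lands in partition 0 and the offsets simply count 0, 1, 2, and so on.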

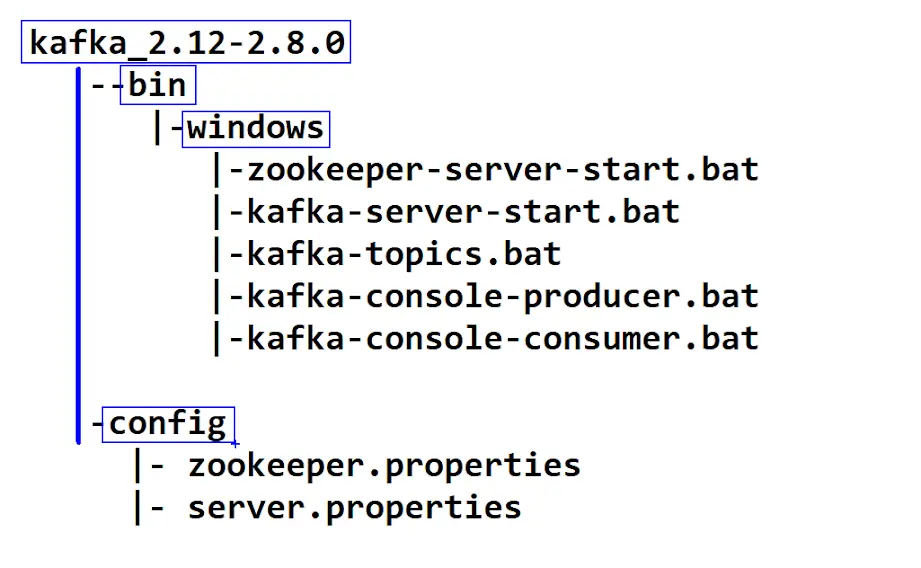

Apache Kafka Setup

- Download Apache Kafka, choosing the binary build for Scala 2.13. (If you get the error “The input line is too long.” on Windows, try Kafka version 3.2.0.)

- Extract the downloaded file.

- Open the command prompt/terminal from that location.

Apache Kafka Commands

Run the commands below in the command prompt, each in a separate tab or window. These commands are specific to Windows. On Unix-like systems, use the matching “.sh” script in the “bin” folder, outside the “windows” folder.

- Zookeeper

```
.\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties
```

- Kafka Server

```
.\bin\windows\kafka-server-start.bat .\config\server.properties
```

- Create Topics [name, partitions, replication factor, Kafka server address]

```
.\bin\windows\kafka-topics.bat --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic knowprogramone
```

To list all the topics:

```
.\bin\windows\kafka-topics.bat --list --bootstrap-server localhost:9092
```

- Producer (console-based)

```
.\bin\windows\kafka-console-producer.bat --bootstrap-server localhost:9092 --topic knowprogramone
```

Enter some messages:

```
>hi
>hello
>{eid:10, ename:john, esal:2.2}
```

- Consumer (console-based)

```
.\bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic knowprogramone
```

To read the messages from the beginning:

```
.\bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic knowprogramone --from-beginning
```

We have used console-based producers and consumers only for demo purposes. In a real project, we define separate applications for the producer and consumer services.
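The effect of `--from-beginning` can be sketched with a tiny model of where a newly started console consumer begins reading. Without the flag, a new console consumer starts at the end of the log and only shows messages produced after it starts; with the flag, it starts at offset 0. `StartPositionDemo` and `readFrom` are hypothetical names, and the list stands in for a single partition.

```java
import java.util.List;

// Simplified model of a new consumer's starting position in one partition.
class StartPositionDemo {
    // fromBeginning = true  -> start at offset 0 (like --from-beginning)
    // fromBeginning = false -> start at the end of the log (only new messages)
    static List<String> readFrom(List<String> log, boolean fromBeginning) {
        int start = fromBeginning ? 0 : log.size();
        return log.subList(start, log.size());
    }

    public static void main(String[] args) {
        // Messages already produced before the consumer starts.
        List<String> log = List.of("hi", "hello");
        System.out.println(readFrom(log, false)); // []
        System.out.println(readFrom(log, true));  // [hi, hello]
    }
}
```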

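To create a topic, the inputs are:

- topicName: the name given to the topic (the key).

- replication factor: the number of copies to be created for each message.

- partitions: the number of parts the topic's data is divided into.

- Server details: the address of the Kafka server, given with --bootstrap-server (localhost:9092 in the command above). Kafka versions before 3.0 accepted the Zookeeper address instead (--zookeeper localhost:2181), and Zookeeper created the topic with the given details.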

- Zookeeper runs on port 2181 by default (see clientPort in config/zookeeper.properties).

- The Kafka server (the full setup, together with Zookeeper) runs on port 9092 by default.
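The topic inputs above can be tied together in a hypothetical helper that assembles the topic-creation command for Windows or Unix and applies one rule Kafka enforces when creating a topic: the replication factor cannot be larger than the number of running brokers. `CreateTopicCommand` and its `brokers` parameter are assumptions for illustration; only the script names and the `--create`, `--bootstrap-server`, `--replication-factor`, `--partitions`, and `--topic` options come from the commands shown earlier.

```java
// Hypothetical helper: builds the kafka-topics command and performs a
// simplified version of Kafka's replication-factor check.
class CreateTopicCommand {
    static String build(String topic, int partitions, int replicationFactor,
                        int brokers, String bootstrapServer, boolean windows) {
        if (partitions < 1) {
            throw new IllegalArgumentException("partitions must be at least 1");
        }
        // Each copy of a partition must live on a different broker.
        if (replicationFactor < 1 || replicationFactor > brokers) {
            throw new IllegalArgumentException("replication factor " + replicationFactor
                    + " needs at least that many brokers, but only " + brokers + " running");
        }
        String script = windows ? ".\\bin\\windows\\kafka-topics.bat" : "bin/kafka-topics.sh";
        return String.join(" ", script, "--create",
                "--bootstrap-server", bootstrapServer,
                "--replication-factor", String.valueOf(replicationFactor),
                "--partitions", String.valueOf(partitions),
                "--topic", topic);
    }

    public static void main(String[] args) {
        // A fresh setup: one broker, default partitions and replication factor of 1.
        System.out.println(build("knowprogramone", 1, 1, 1, "localhost:9092", true));
        System.out.println(build("knowprogramone", 1, 1, 1, "localhost:9092", false));
        try {
            build("knowprogramone", 1, 3, 1, "localhost:9092", true);
        } catch (IllegalArgumentException e) {
            System.out.println("Rejected: " + e.getMessage());
        }
    }
}
```

On a single-broker setup like the one started above, this is why the replication factor has to stay at 1.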
