➤ How to Code a Game

➤ Array Programs in Java

➤ Java Inline Thread Creation

➤ Java Custom Exception

➤ Hibernate vs JDBC

➤ Object Relational Mapping

➤ Check Oracle DB Size

➤ Check Oracle DB Version

➤ Generation of Computers

➤ XML Pros & Cons

➤ Git Analytics & Its Uses

➤ Top Skills for Cloud Professional

➤ How to Hire Best Candidates

➤ Scrum Master Roles & Work

➤ CyberSecurity in Python

➤ Protect from Cyber-Attack

➤ Solve App Development Challenges

➤ Top Chrome Extensions for Twitch Users

➤ Mistakes That Can Ruin Your Test Metric Program

Java Collection Interview Questions | Let us some important Java Collection interview questions that are most commonly asked. Also see:- Java 8 Interview Questions

Table of Contents

- Concurrent Collections Interview Questions

- Internal Working of HashMap and HashSet – After Java 8 Performance Improvement

- Differences between Array, Vector, ArrayList, and LinkedList

- Comparable & Comparator

- Coding Questions

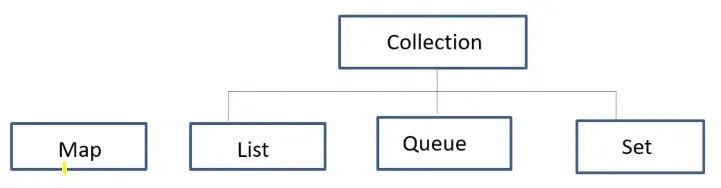

1. Explain Collection Hierarchy.

java.util.Collection is the root of the Java collection framework and most of the collections in Java are inherited from this interface except the Map interface.

java.util.List:

- It contains ordered elements

- May include duplicates

- Supports the index-based search, and random access.

- Elements can be easily inserted irrespective of the position.

java.util.Set:-

- Does not define an order for the elements hence index-based search is not supported.

- Does not contain duplicates

java.util.Queue:-

- It follows the FIFO approach

- Elements are added at the rear end and removed from the front end.

java.util.Map:-

- It represents key, value pair.

- It does not implement collection.

- It can only contain a unique key.

- It can have duplicate values.

List(I)

- Collection(I) 1.2v

- List(I) 1.2v

- ArrayList 1.2v

- LinkedList 1.2v

- Vector 1.0v

- Stack 1.0v

- List(I) 1.2v

ArrayList:-

- It has dynamic resizing – 50% of the original size. Whenever the ArrayList is full and we want to add more elements then it resizes to 50% of the original size.

- It is non-synchronized.

LinkedList:-

- It implements List and Deque interfaces.

- It maintains the insertion order.

- It is non-synchronized.

- It does not support accessing elements randomly.

- We can use ListIterator to iterate LinkedList elements.

Vector:-

- It is synchronized.

- It maintains the insertion order.

- It is thread-safe.

- It increases its size by doubling the array size. (100% increment)

- It is a legacy class.

Stack:-

- Last in First out (LIFO).

- The elements are added as well as removed from the rear end.

Set(I)

- Collection(I) 1.2v

- Set(I) 1.2v

- HashSet 1.2v

- LinkedHashSet 1.4v

- SortedSet(I) 1.2v

- NavigableSet(I) 1.6v

- TreeSet 1.2v

- NavigableSet(I) 1.6v

- HashSet 1.2v

- Set(I) 1.2v

HashSet:-

- It implicitly implements a hashtable.

- Only one null element can be added.

- It contains only unique elements.

- It is an unordered set.

LinkedHashSet:-

- It is an ordered version of HashSet that maintains a doubly linked List across all elements.

- It preserves the insertion order.

SortedSet:-

- duplicates are not allowed & all objects should be inserted according to some sorting order.

- All elements of a Sorted Set must implement the Comparable interface.

- (Default: It is a set sorted in ascending order.)

Tree Set:-

- Uses a Tree for storage (self-balancing binary search tree like Red-Black Tree).

- Objects in a TreeSet are stored in a sorted and ascending order.

- It doesn’t accept any null value.

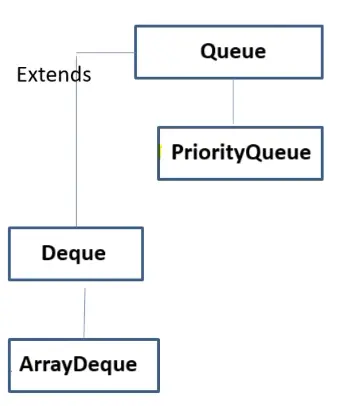

Queue(I)

- Collection(I) 1.2v

- Queue(I) 1.5v

- PriorityQueue 1.5v

- BlockingQueue 1.5v

- PriorityBlockingQueue 1.5v

- LinkedBlockingQueue 1.5v

- DeQue 1.5v

- ArrayDeque 1.5v

- Other classes 1.5v

- Queue(I) 1.5v

Here Queue and Deque are interfaces, whereas PriorityQueue and ArrayDeque are classes.

Priority Queue:-

- PriorityQueue is a queue with priority associated with each element.

- The High priority element is served before a low-priority element irrespective of their insertion order.

Deque(I):-

- It refers to a double-ended queue.

- Elements can be added and removed from either end.

ArrayDeque(C):-

- It is a way to apply a resizable array in addition to the implementation of the Deque interface.

- No capacity restricted.

Map(I)

- Map(I) 1.2v

- HashMap 1.2v

- LinkedHashMap 1.4v

- WeakHashMap 1.2v

- IdentityHashMap 1.4v

- Hashtable 1.0v

- Properties 1.0v

- SortedMap(I) 1.2v

- NavigableMap(I) 1.6v

- TreeMap 1.2v

- NavigableMap(I) 1.6v

- HashMap 1.2v

Hashtable implements Map(I) and extends Dictionary(AC) class. They are introduced in 1.0v therefore they are considered as legacy classes.

- Dictionary (AC) 1.0v

- Hashtable 1.0v

- Properties 1.0v

- Hashtable 1.0v

HashMap:-

- It is non-synchronized.

- It allows only one null key but multiple null values.

LinkedHashMap

- It preserves the insertion order of the element.

WeakHashMap

- Entries are automatically removed when their keys are no longer in ordinary use, preventing memory leaks.

- It allows null values and the null key

IdentityHashMap

- It uses reference equality (==) instead of object equality (equals()) for comparing keys.

HashTable:-

- It is synchronized in nature.

- It does not allow any null key or value.

Properties

- Represents a persistent set of properties that can be loaded from or saved to a stream.

- It is often used for configuration settings.

Sorted Map:-

- all objects should be inserted according to some sorting order. By default, Entries are maintained in ascending key order.

Tree Map:-

- Implicitly implements the Red-Black Tree implementation.

- Can not store any null key.

2. Why Map does not extend the Collection interface?

- The Map interface in Java follows a key-value pair structure whereas the Collection interface is a collection of objects stored in a structured manner with a specified access mechanism.

- The main reason Map does not extend the Collection interface is that the add(E e) method of the Collection interface does not support the key-value pair like the Map interface’s put(K, V) method.

- It might not extend the Collection interface but still is an integral part of the Java Collections framework.

public interface List<E> extends Collection<E> { }public interface Map<K, V> { }3. Internal working of HashMap.

- HashMap in Java works on the hashing principle where hash functions are used to link key and value in HashMap, Objects (Map.Entry -> contains both key and value object) are stored by calling the put(key, value) method of HashMap and retrieved by calling get(key) method.

- When we call the put() method, the hashcode() method of the key object is called which calculates an index of the bucket location where we can store the value object.

- To retrieve, you call the get() method and again pass the key object, which lands up at the same index or bucket and you retrieve the value object.

- If two different keys return the same hash index then a collision occurs. In this case, a linked list is formed at that bucket location and a new entry is stored as the next node.

- Now put() method will just append the object nodes in the linked list.

- But in the case of get(), if we have a linked list at that index then we need an extra check to search for the correct value, this is done by the equals() method. It checks every key of every node and if equals() returns true then Map returns that corresponding value from the linked list.

4. What will happen if we don’t override hashcode() and equals() method in our class? (Talk in terms of collection/Map).

If you don’t override hashCode and equals methods in your class, it can lead to issues when you use instances of that class in collections like HashSet, HashMap, or LinkedHashMap.

- HashSet: It relies on

hashCodeandequalsto determine the uniqueness of elements. Without proper overrides, duplicate elements might not be recognized as duplicates, leading to unexpected behavior. - HashMap: Similarly, it uses

hashCodefor locating the bucket andequalsfor key comparison. Without proper overrides, keys may not be retrieved correctly, leading to problems like duplicate keys or inability to fetch values.

In short, not overriding hashCode and equals can cause unpredictable behavior and bugs in your collections, compromising the integrity of your data operations.

5. How is LinkedList implemented in Java, is it a Singly or Doubly linked list?

LinkedList in Java is a doubly linked list.

6. When to use ArrayList and LinkedList?

LinkedLists are better to use for the update operations whereas ArrayLists are better to use for the search operations. Due to the RandomAccess interface, searching/fetching in the arraylist can be done in O(1).

7. What is the difference between HashMap, TreeMap, and LinkedHashMap?

LinkedHashMap preserves the insertion order, it does not sort.

| HashMap | TreeMap | LinkedHashMap | |

|---|---|---|---|

| Order | Random | Sorted order according to the natural ordering | Store according to the insertion order |

| Time complexity to search and insert | O(1) | O(log n) | O(1) |

| Null keys | Allowed | Not Allowed | Allowed |

| Data structure support | hash table, LinkedList, and Red-Black tree | Red-Black tree | hash table and doubly-linked list |

| Key requirement | equals() and hashcode() method need to be overwritten | Along with equals() and hashcode(), the Comparator(I) to implement | equals() and hashcode() method need to be overwritten |

| Usage | Normal processing, faster retrieval. ConcurrentHashMap can be used as per the need | Required when sorting and navigable features are needed as per the use case | Required when we need to maintain the insertion order |

8. What is a PriorityQueue in Java?

- A priority queue in Java is an abstract data type similar to a regular queue or stack data structure but has a special feature called priority associated with each element.

- In this queue, a high-priority element is served before a low-priority element irrespective of their insertion order. The PriorityQueue is based on the priority heap.

- The elements of the priority queue are ordered according to the natural ordering, or by a Comparator provided at queue construction time, depending on which constructor is used.

import java.util.PriorityQueue;

public class Test {

public static void main(String[] args) {

PriorityQueue<Integer> priorityQueue = new PriorityQueue<>();

priorityQueue.add(10);

priorityQueue.add(20);

priorityQueue.add(5);

priorityQueue.add(15);

System.out.println("PriorityQueue: " + priorityQueue);

System.out.println("Removed element: " + priorityQueue.poll());

System.out.println("PriorityQueue after removal: " + priorityQueue);

System.out.println("Head of the queue: " + priorityQueue.peek());

}

}Output:-

PriorityQueue: [5, 15, 10, 20]

Removed element: 5

PriorityQueue after removal: [10, 15, 20]

Head of the queue: 10

In Java’s PriorityQueue, the priority is determined by the natural ordering of the elements (like numerical or alphabetical order) or by a custom Comparator you provide. The element at the head is always the one with the highest priority according to this ordering. If you want a custom priority, you can use a Comparator:-

import java.util.Comparator;

import java.util.PriorityQueue;

public class Test {

public static void main(String[] args) {

// custom Comparator for descending order

Comparator<Integer> customComparator = (a, b) -> b - a;

PriorityQueue<Integer> priorityQueue = new PriorityQueue<>(customComparator);

priorityQueue.add(10);

priorityQueue.add(20);

priorityQueue.add(5);

priorityQueue.add(15);

System.out.println("PriorityQueue: " + priorityQueue);

System.out.println("Removed element: " + priorityQueue.poll());

System.out.println("PriorityQueue after removal: " + priorityQueue);

System.out.println("Head of the queue: " + priorityQueue.peek());

}

}Output:-

PriorityQueue: [20, 15, 5, 10]

Removed element: 20

PriorityQueue after removal: [15, 10, 5]

Head of the queue: 15

9. Can you use any class as a Map key?

No. Minimum requirement to use a Class as a Key:-

- The class must override the equals() method and the hashCode() method.

- The class should adhere to the rules associated with equals() and hashCode() for all instances.

- The class field that is not used in the equals() method should not be used in the hashCode() method as well.

- The best way to use a user-defined key class is by making it immutable. It helps in caching the hashCode() value for better performance. Also if the class is made immutable it will ensure that the hashCode() and equals() are not changing in the future.

- Java Wrapper classes (Integer, Long, Boolean, etc) and String already satisfy these conditions therefore generally they are used as a key.

10. How do you make the Java ArrayList read-only?

We can obtain a Java ArrayList that is Read-only by calling the Collections.unmodifiableCollection() method. When we define an ArrayList as Read-only, we cannot modify the collection through the add(), remove(), or set() methods.

import java.util.ArrayList;

import java.util.Collections;

import java.util.List;

public class Test {

public static void main(String[] args) {

List<String> list = new ArrayList<>();

list.add("One");

list.add("Two");

// make the ArrayList read-only

List<String> readOnlyList = Collections.unmodifiableList(list);

// try modifying the read-only list

try {

readOnlyList.add("Three");

} catch (UnsupportedOperationException e) {

System.out.println("Modification attempt failed: " + e);

}

System.out.println("Read-Only List: " + readOnlyList);

}

}Output:-

Modification attempt failed: java.lang.UnsupportedOperationException

Read-Only List: [One, Two]

The Collections class also contains unmodifiableSet(), unmodifiableMap(), unmodifiableCollection(), unmodifiableNavigableMap(), unmodifiableNavigableSet(), unmodifiableSortedMap(), and unmodifiableSortedSet() methods.

11. How to traverse or loop over a Map in Java?

Different ways:-

- Iterating or looping map using Java 5 for each loop

- Iterating Map in Java using KeySet Iterator

- Looping HashMap in Java using EntrySet and Java 5 for loop

- Iterating HashMap in Java using EntrySet and Java iterator

12. How to remove duplicates from ArrayList?

There are two ways to remove duplicates from the ArrayList:-

- Using HashSet: By using HashSet we can remove the duplicate element from the ArrayList, but it will not then preserve the insertion order.

- Using LinkedHashSet: We can also maintain the insertion order by using LinkedHashSet instead of HashSet.

The Process to remove duplicate elements from ArrayList using the LinkedHashSet:-

- Copy all the elements of ArrayList to LinkedHashSet.

- Empty the ArrayList using the clear() method, which will remove all the elements from the list.

- Now copy all the elements of LinkedHashset to ArrayList.

List<Integer> numbers = new ArrayList<>(Arrays.asList(100, 10, 50, 100, 50, 50));

Set<Integer> uniqueNumbers = new LinkedHashSet<>(numbers);

System.out.println(uniqueNumbers); // [100, 10, 50]

numbers.clear();

numbers = new ArrayList<>(uniqueNumbers);

System.out.println(numbers); // [100, 10, 50]Using Java 8:- Use distinct() method.

List<Integer> numbers = new ArrayList<>(Arrays.asList(100, 10, 50, 100, 50, 50));

List<Integer> uniqueNumbers = numbers.stream()

.distinct()

.collect(Collectors.toList());

System.out.println(uniqueNumbers); // [100, 10, 50]13. Different types of references?

In Java, we have three main types of references:-

- Strong References: Any object that has a strong reference pointing to it is not eligible for garbage collection. Example:-

Integer a = 1;- Soft References: An object that has a SoftReference pointing to it won’t be garbage collected until the JVM absolutely needs memory.

Integer a= 1;

SoftReference<Integer> soft = new SoftReference<Integer>(a);

a= null;The variable ‘a’ had a strong reference, next, we are wrapping a strong reference into a soft reference. After making that strong reference ‘a’ as null, the object is eligible for garbage collection but will be collected only when the JVM absolutely needs memory.

- Weak References: The objects that are referenced by weak references are garbage collected eagerly; the garbage collection won’t wait until it needs memory in that case.

Integer a= 1;

WeakReference<Integer> soft = new WeakReference<Integer>(a);

a= null;References of a WeakReference type are used as keys in WeakHashMap.

In the WeakHashMap, JVM periodically checks keys that have weak references. A weak reference signifies that the key is no longer used by anyone and is eligible to be collected by the garbage collector. Then WeakHashMap removes the associated entry from the map.

14. How does WeakHashMap differ from other HashMap?

WeakHashMap is a HashMap, with keys that are of a WeakReference type.

An entry in a WeakHashMap will automatically be removed when its key is no longer in ordinary use, meaning that there is no single Reference that points to that key. When the garbage collection (GC) process discards a key, its entry is effectively removed from the map, even though it is associated with WeakHashMap. i.e. Garbage Collector dominates over WeakHashMap.

In HashMap, even if the key does not have any reference that points to that key (is eligible for the GC) is in reality not eligible for garbage collection because it’s associated with the entry object added in a bucket of hashMap. This means that the HashMap has dominance over the garbage collector.

import java.util.HashMap;

import java.util.Map;

import java.util.WeakHashMap;

public class Test {

public static void main(String[] args) {

Map<Integer, String> hashMap = new HashMap<>();

Map<Integer, String> weakHashMap = new WeakHashMap<>();

// key object

Integer key1 = 1000;

Integer key2 = 2000;

hashMap.put(key1, "HashMapValue");

weakHashMap.put(key2, "WeakHashMapValue");

// display initial content

System.out.println("HashMap before GC: " + hashMap);

System.out.println("WeakHashMap before GC: " + weakHashMap);

// remove strong references

key1 = null;

key2 = null;

// Invoke garbage collection

System.gc();

System.out.println("===Called GC===");

// wait a bit for GC to happen

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

// display content after GC

System.out.println("HashMap after GC: " + hashMap);

System.out.println("WeakHashMap after GC: " + weakHashMap);

}

}Output:-

HashMap before GC: {1000=HashMapValue}

WeakHashMap before GC: {2000=WeakHashMapValue}

===Called GC===

HashMap after GC: {1000=HashMapValue}

WeakHashMap after GC: {}

15. What are the best practices related to the Java Collections Framework?

- Choosing the right type of collection based on the need, for example, if size is fixed, we might want to use Array over ArrayList.

- If we don’t want duplicates, we should use Set.

- If we want to preserve the insertion order then we should use LinkedList/LinkedHashSet/LinkedHashMap.

- Some collection classes allow us to specify the initial capacity, so if we have an estimate of the number of elements we will store, we can use it to avoid rehashing or resizing.

- Write programs in terms of interfaces, not implementations, it allows us to change the implementation easily at a later point in time.

- Always use Generics for type safety and avoid ClassCastException at runtime.

- Use immutable classes provided by JDK as keys in Map to avoid the implementation of hashCode() and equals() for our custom class.

16. What is the load factor in HashMap?

HashMap’s performance depends on two things first initial capacity and second load factor. Whenever we create HashMap initial capacity number of the bucket is created initially and the load factor is the criteria to decide when we have to increase the size of HashMap when it’s about to get full.

A load factor is a number that controls the resizing of HashMap when a number of elements in the HashMap cross the load factor as if the load factor is 0.75 and when becoming more than 75% full then resizing trigger which involves array copy.

17. How does resize happen in HashMap?

The resizing happens when the map becomes full or when the size of the map crosses the load factor. For example, if the load factor is 0.75 and then becomes more than 75% full, then resizing trigger, which involves an array copy. First, the size of the bucket is doubled, and then old entries are copied into a new bucket.

18. What is the difference between the capacity and size of HashMap in Java?

The capacity denotes how many entries HashMap can store, and size denotes how many mappings or key/value pair is currently present.

19. Does Set have a get(i) method or does Set allow index-based fetch?

No. Set in Java doesn’t have a get(i) method because sets are not ordered collections – they don’t maintain the insertion order, except for specific implementations like LinkedHashSet. To retrieve elements by index, you would use a List which maintains order and provides indexed access.

Sets ensure uniqueness, but if you need ordered and indexed access, a List is your friend.

20. Which sorting is used by Collections?

If elements are less than 7 then Insertion Sort is used otherwise Merge Sort is used.

Concurrent Collections Interview Questions

1. What is the need for concurrent collection?

- Traditional collections are not thread-safe. Only a few classes like Vector, and HashTable are thread-safe.

- Collections provide some methods like synchronizedList, synchronizedMap, and synchronizedSet which provide thread safety but the problem is they capture lock on the complete collection even for reading. That decreases performance.

- In the traditional collection, if one thread iterates and the other tries to modify structural change then ConcurrentModificationException is thrown.

// Example of ConcurrentModificationException in single thread

public class Test {

public static void main(String[] args) {

HashMap<Integer, Integer> map = new HashMap<>();

map.put(1, 1);

map.put(2, 2);

map.put(3, 3);

Iterator<Integer> iterator = map.keySet().iterator();

while (iterator.hasNext()) {

Integer key = iterator.next();

System.out.println(map.get(key));

if (key.equals(2)) {

map.put(4, 4);

}

}

}

}Output:-

1

2

Exception in thread “main” java.util.ConcurrentModificationException

If we run the same program using the ConcurrentHashMap class then it won’t throw any exception and 4 will be added to the map.

import java.util.Iterator;

import java.util.concurrent.ConcurrentHashMap;

public class Test {

public static void main(String[] args) {

ConcurrentHashMap<Integer, Integer> map = new ConcurrentHashMap<>();

map.put(1, 1);

map.put(2, 2);

map.put(3, 3);

Iterator<Integer> iterator = map.keySet().iterator();

while (iterator.hasNext()) {

Integer key = iterator.next();

System.out.println(map.get(key));

if (key.equals(2)) {

map.put(4, 4);

}

}

}

}Output:-

1

2

3

4

2. Difference between fail-fast and fail-safe iterators?

In Java, “fail-fast” and “fail-safe” are concepts related to how collections handle concurrent modification during iteration. Both approaches deal with the scenario where a collection is modified while it is being iterated over. However, they address this scenario differently:-

1. Fail-Fast:-

- In a fail-fast approach, if a collection is structurally modified while it is being iterated over (by any means other than the iterator’s own remove method), the iterator will throw a

ConcurrentModificationException. - The purpose of this behavior is to immediately detect and prevent concurrent modification, ensuring that the integrity of the collection is maintained.

- Fail-fast iterators are typically used in non-thread-safe collections like the collections provided by the

java.utilpackage, such asArrayList,HashMap, etc.

2. Fail-Safe:-

- In contrast, fail-safe iterators do not throw

ConcurrentModificationExceptioneven if the collection is modified during iteration. - Instead of operating directly on the underlying collection, fail-safe iterators operate on a clone of the collection’s data or on a copy of the data that was present at the time of iterator creation.

- This approach ensures that the iteration is not affected by modifications made to the collection after the iterator was created.

- Fail-safe iterators are typically used in concurrent collections like those provided by the

java.util.concurrentpackage, such asConcurrentHashMap,CopyOnWriteArrayList, etc.

Here’s a brief comparison:-

| Fail-Fast | Fail-Safe | |

| Behavior | Throws ConcurrentModificationException on concurrent modification during iteration | Iteration continues without exceptions and operates on a copy or snapshot of the collection present at the time of iterator creation. |

| Use Case | Non-thread-safe collections | Thread-safe or concurrent collections |

3. Example of Concurrent Collection classes?

Certainly! Here are some examples of thread-safe collections provided by Java’s java.util.concurrent package:-

- ConcurrentHashMap: This class provides a thread-safe implementation of the Map interface. It allows concurrent access to the map from multiple threads without the need for explicit synchronization. It achieves this through a combination of internal locking mechanisms and partitioning the map into segments.

- CopyOnWriteArrayList: This class implements a thread-safe variant of the List interface. It achieves thread safety by creating a new copy of the underlying array every time an element is added, modified, or removed. Iterators obtained from this collection will NOT throw

ConcurrentModificationException, but they operate on a snapshot of the list taken at the time the iterator was created. - CopyOnWriteArraySet: It provides safe concurrent access by creating a new copy of the underlying array with each modification (add, remove).

- ConcurrentLinkedQueue: This class implements a thread-safe variant of the Queue interface. It is based on a linked node structure and provides efficient non-blocking operations for adding and removing elements from the queue. Multiple threads can safely access and modify the queue concurrently.

- ConcurrentSkipListMap and ConcurrentSkipListSet: These classes provide thread-safe implementations of the SortedMap and SortedSet interfaces, respectively. They are based on skip lists, which allow for efficient concurrent access and modifications while maintaining sorted order.

These thread-safe collections are designed to be used in concurrent environments where multiple threads may access and modify the collection concurrently. They provide better performance and scalability compared to manually synchronized collections.

4. What do you understand by BlockingQueue?

The Java BlockingQueue interface, java.util.concurrent.BlockingQueue, represents a thread-safe queue to put elements into and take elements out of from. In other words, multiple threads can insert and take elements concurrently from a Java BlockingQueue, without any concurrency issues arising.

It is capable of blocking the threads that try to insert or take elements from the queue. For instance, if a thread tries to take an element and there are none left in the queue, the thread can be blocked until there is an element to take.

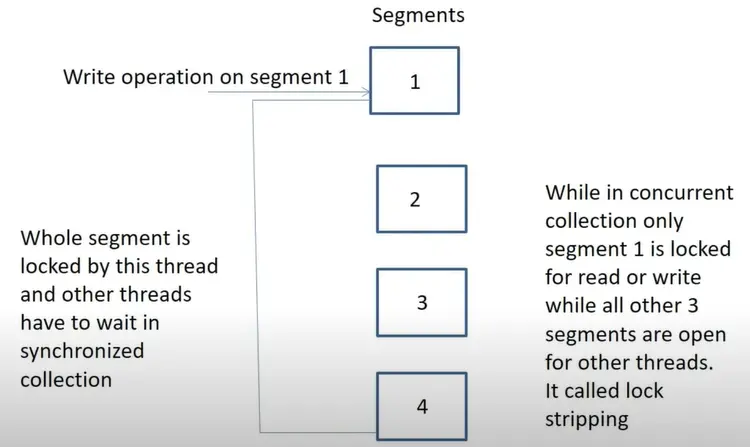

5. What is the difference between a synchronized collection and a concurrent collection?

- Both synchronized and concurrent collection classes provide thread safety.

- The difference between them comes in performance, scalability, and how they achieve thread safety.

- Synchronized collections like synchronized HashMap are much slower than their concurrent counterparts e.g. ConcurrentHashMap, the main reason for this slowness is locking.

One collection is divided into multiple segments.

6. Why performance of ConcurrentHashMap is better than HashTable and synchronizedMap?

In HashTable and synchronizedMap, the lock is acquired on the complete collection so only a single thread can capture the lock at a time while in ConcurrentHashMap, the lock is acquired on bucket level so at a time multiple threads can capture the lock at different buckets.

7. What is the concurrency level in ConcurrentHashMap?

The concurrency level defines how many threads are allowed to get locked. By default, ConcurrentHashMap has 16 buckets and the concurrency level also has 16 values. It means 16 threads can take a lock at a time each per bucket. We can change the concurrency level value, as well for example if we do 8 then all bucket counts are divided by 8, and result the number of buckets will keep a single lock.

8. Can multiple threads read from ConcurrentHashMap simultaneously?

Yes. The read operation doesn’t need any lock.

9. Can multiple threads write from ConcurrentHashMap simultaneously?

No. Write operation needs a lock.

10. Why null is not allowed in ConcurrentHashMap while allowed in hashmap?

if(concurrentHM.containsKeys(k)){

return concurrentHM.get(k);

}Suppose we have 2 threads t1 and 12. The t1 runs the if condition and checks if the key is present in ConcurrentHashMap, it goes to fetch value but in the meantime second thread t2 deletes the key from ConcurrentHashMap.

So the thread t1 gets the value as null. Now it thinks the value stored for key k is null or there is no key (whether the key explicitly maps to null vs the key isn’t mapped), but that’s not the case here. Hence to remove ambiguity, null is not allowed.

It’s not the case in HashMap as concurrent modification is not allowed hence deletion by one thread and read operation by another simultaneously is not possible in HashMap.

11. Internal working of ConcurrentHashMap?

As no concurrency level has been set explicitly, the ConcurrentHashMap gets divided into 16 segments. Each segment acts as an independent HashMap. During the write operation, the lock is obtained on this particular segment and not on the entire HashMap.

Hence it has multiple Segment level locks. It uses a Locking technique named ReentrantLock or lock stripping.

So 2 Threads operating on different segments can acquire lock on those segments without interfering with each other and can proceed simultaneously as they are working on separate Segment locks.

- Read/Get Operation:- Two Threads T1 and T2 can read data from the same or different segment of ConcurrentHashMap at the same time without blocking each other. Read operation does not require the lock of segments.

- Write/Put Operation:- Two Threads T1 and T2 can write data on different segments at the same time without blocking the other. Write operation requires the lock of that particular segment.

But Two threads can’t write data on the same segments at the same time. One has to wait for the other to complete the operation.

12. Can ConcurrentModificationException occurs only in a multithreaded environment?

No. ConcurrentModficationException can also occur in a single-threaded environment. On looping over a map using a for loop and trying to remove one element, you will get the ConcurrentModificatoinExcetpion. Because we broke the rule of not modifying a Collection during iteration.

public class Test {

public static void main(String[] args) {

HashMap<Integer, Integer> map = new HashMap<>();

map.put(1, 1);

map.put(2, 2);

map.put(3, 3);

Iterator<Integer> iterator = map.keySet().iterator();

while (iterator.hasNext()) {

Integer key = iterator.next();

System.out.println(map.get(key));

if (key.equals(2)) {

map.remove(key);

}

}

}

}How does Java know to throw ConcurrentModificationExeption? It uses a transient variable called modCount, which keeps track of how many times a list is modified structurally. Structural modifications are those that change the size of the list, which may affect the progress of iteration and may yield incorrect results.

Both Iterator and ListIterator use this field to detect unexpected changes. Other methods of List that structurally modify List also use this method e.g. add(), remove().

Cause: The real cause of ConcurrentModfiicationException is inconsistent modCount. When we are iterating over ArrayList then Iterator’s next() method keeps track of modCount. If we modify the collection by adding or removing elements then modCount will change and it will not match with the expected modCount, hence Iterator will throw ConcurrentModificationException.

Solution: Use Iterator if you are doing it on the single-threaded environment, otherwise use concurrent collection classes e.g. CopyOnWriteArrayList to remove elements while you are looping over it.

// list.remove(ele);

//itr.remove(); // right callpublic class Test {

public static void main(String[] args) {

List<Integer> list = new ArrayList<>();

list.add(1);

list.add(2);

list.add(3);

list.add(4);

list.add(5);

Iterator<Integer> iterator = list.iterator();

while (iterator.hasNext()) {

Integer value = iterator.next();

System.out.println(value);

if (value.equals(2)) {

// list.remove(value);

iterator.remove();

}

}

System.out.println("After removing");

Iterator<Integer> itAfterModification = list.iterator();

while (itAfterModification.hasNext()) {

Integer value = itAfterModification.next();

System.out.println(value);

}

}

}13. What is the mod count in ArrayList?

The modCount is the ArrayList variable that holds the modification count and every time we use add, remove, or trim methods, it gets changed. The expectedModCount is the iterator variable that is initialized when we create an iterator with the same value as modCount. This explains why we don’t get exceptions if we use a set method to replace any existing element.

So the iterator throws ConcurrentModificationException if the list size is changed. This modCount is used to initialize expectedModCount for that Iterator instance.

final void checkForComodification() {

if (modCount!= expectedModCount) {

throw new ConcurrentModificationException();

}

}14. Internal working of CopyOnWriteArrayList?

It is the thread-safe version of the List and is kept in the concurrent package. For every write operation, a separate clone copy is created and JVM will synchronize the clone and actual copy.

In ArrayList, we get java.util.concurrentModificationException as soon as the ArrayList is modified while reading. It happens because the ArrayList iterator is fail-fast by design. What it means is that once the iterator is created, if the ArrayList is modified, it throws ConcurrentModificationException.

15. What is the difference between HashMap and ConcurrentHashMap?

| HashMap | ConcurrentHashMap |

| HashMap is thread-unsafe. | ConcurrentHashMap is thread-safe. |

| Performance is high. | Performance is low. |

| HashMap can contain null key and value. | ConcurrentHashMap can not contain null as a key/value pair. |

| It is fail-fast, if one thread is iterating the map and another thread tries to do structural change then it throws CocurrentMofificationException. | ConcurrentHashMap is fail-safe, if one thread is iterating the map then another can update the same map. |

16. What is the difference between ArrayList and CopyOnWriteArrayList?

| ArrayList | CopyOnWriteArrayList |

| ArrayList is thread unsafe | CopyOnWriteArrayList is thread-safe |

| Performance is high | Performance is low |

| It is fail fast, if one thread is iterating ArrayList and the other thread tries to do structural change then it throws CocurrentMofificationException. | CopyOnWriteArrayList is fail-safe, if one thread is iterating List and the other tries to update List then the new clone will be created and later JVM will synchronize the clone and actual copy. |

| The iterator can remove the element. | If the Iterator tries to remove the element then the UnsupportedOperationException exception is thrown. |

import java.util.Iterator;

import java.util.concurrent.CopyOnWriteArrayList;

public class Test {

public static void main(String[] args) {

CopyOnWriteArrayList<Integer> list = new CopyOnWriteArrayList<>();

list.add(1);

list.add(2);

list.add(3);

list.add(4);

list.add(5);

Iterator<Integer> iterator = list.iterator();

while (iterator.hasNext()) {

Integer value = iterator.next();

System.out.println(value);

if (value.equals(2)) {

// list.remove(value);

iterator.remove();

}

}

}

}Output:-

1

2

Exception in thread “main” java.lang.UnsupportedOperationException

Internal Working of HashMap and HashSet – After Java 8 Performance Improvement

We need to cover 3 points for this question:

- How does It work on the principle of hashing?

- How does the put() method work Internally?

- How does the get() method work internally?

1. What is Hashing?

HashMap internally works on the principle of Hashing.

Hashing means using some function or algorithm to map object data to some integer value, the hashCode() method returns you that hash code. Hence it is necessary to write the hashCode() method properly for better performance of HashMap.

If we override the hashCode() method, it’s necessary to fulfill the Equals and Hashcode Contract.

2. What is put() method Internal working in HashMap?

- MAP is an object that maps keys to values.

- So, there must be some mechanism in HashMap to store this key-value pair.

- HashMap has a nested static class Entry, which looks like this:-

// Entry class

static class Entry<K, V> implements Map.Entry<K, V> {

final K key;

V value;

Entry<K, V> next;

final int hash;

// other methods

}- Everything in Hashmap is stored in a bucket internally (of the hash table – underlying data structure).

- Firstly hash value is calculated using the key’s hash code by calling its hashCode() method. This hash value is used to calculate the index in the array for storing the Entry object. JDK designers well assumed that there might be some poorly written hashCode() functions that can return very high or low hash code values. To solve this issue, they introduced another hash() function and passed the object’s hash code to this hash() function to bring a hash value in the range of array index size.

- With the Hash Code in place, we put the newly created Entry object K, V in the bucket of the Hash Table.

3. Internal Working of PUT method in case of collision in the old way (before Java 8) for HashMap, LinkedHashMap, and ConcurrentHashMap.

- So, in case of collision, Entry objects are stored in linked list form. When an Entry object needs to be stored in a particular index, HashMap checks whether there is already an entry? If there is no entry already present, the entry object is stored in this location.

- If there is already an object present on the calculated index, its next attribute is checked. If it is null, and current entry object becomes the next node in LinkedList. If the next variable is not null, the procedure is followed until the next is evaluated as null.

- What if we add another value object with the same key as entered before? Logically, it should replace the old value. How it is done? Well, after determining the index position of the Entry object, while iterating over linked on the calculated index, HashMap calls the equals() method on the key object for each entry object.

- All these entry objects in LinkedList will have similar hashcode but the equals() method will test for true equality. If key.equals(k) will be true then both keys are treated as the same key object. This will cause the replacement of the value object inside the entry object only.

- We know that two unequal objects can have the same hash code value. This is a case of collision.

- Hash collisions have a negative impact on the lookup time of HashMap. When multiple keys end up in the same bucket, then values along with their keys used to be placed in a linked list. In case of retrieval, the linked list has to be traversed to get the entry. In the worst-case scenario, when all keys are mapped to the same bucket, the lookup time of HashMap increases from O(1) to O(n).

Java 8 has come with the following improvements/changes of HashMap objects in case of high collisions.

- The alternative String hash function added in Java 7 has been removed.

- Buckets containing a large number of colliding keys will store their entries in a balanced tree instead of a linked list after a certain threshold is reached.

The above changes ensure the performance of O(log(n)) in worst-case scenarios (the hash function is not distributing keys properly) and O(1) with proper hashCode().

4. How Collisions are handled since Java 8 for HashMap, LinkedHashMap, and ConcurrentHashMap.

- Once the number of items in a hash bucket grows beyond a certain threshold, that bucket will switch from using a linked list of entries to a balanced tree. In the case of high hash collisions, this will improve worst-case performance from O(n) to O(log n).

- When a bucket becomes too big (currently: TREEIFY_THRESHOLD = 8), HashMap dynamically replaces it with a balanced tree. This way rather than having a pessimistic O(n) we get a much better O(log n).

- Bins (elements or nodes) of TreeNodes may be traversed and used like any others, but additionally support faster lookup when overpopulated. Tree bins (i.e., bins whose elements are all TreeNodes) are ordered primarily by hashCode, but in the case of ties, if two elements are of the same “class C implements Comparable<C>”, type then their compareTo() method is used for ordering.

- Because TreeNodes are about twice the size of regular nodes, we use them only when bins contain enough nodes. When they become too small (due to removal or resizing) they are converted back to plain bins (currently: UNTREEIFY_THRESHOLD = 6). In usages with well-distributed user hash codes, tree bins are rarely used.

- In Java 8, HashMap replaces the LinkedList with a binary tree when the number of elements in a bucket reaches a certain threshold (8). While converting the list to a binary tree, hashcode is used as a branching variable. If there are two different hashcodes in the same bucket, one is considered bigger and goes to the right of the tree, and the other one to the left. But when both the hashcodes are equal, HashMap assumes that the keys are comparable, and compares the key to determine the direction so that some order can be maintained. It is a good practice to make the keys of HashMap comparable.

- This JDK 8 change applies only to HashMap, LinkedHashMap, and ConcurrentHashMap.

5. How does the get() method work internally for HashMap, LinkedHashMap, and ConcurrentHashMap?

- We pass the key to get the method.

- It first calculates the hash(key).

- Then it checks the first node, Compares the Key with == or .equals() always, and returns it to increase the performance

- If not found on the first node then checks for 2 conditions:

- Is it an instance of the tree – (first instanceof TreeNode) if yes call getTreeNode method

- Is it still a linked list in case the threshold of linked list size is not reached and still we have entry objects in the linked list and not a balanced tree then traverse the linked list till we find our entry object, else return null if the key is not found.

do {

if (e.hash==hash && ((k = e.key) == key || (key != null && key.equals(k))))

return e;

} while ((e = e.next) != null);6. Important points to remember for HashMap, LinkedHashMap, and ConcurrentHashMap?

- The data structure to store entry objects is of type Entry.

- A particular index location in an array is referred to as a bucket because it can hold the first element of a LinkedList of entry objects.

- The key object’s hashCode() is required to calculate the index location of the Entry object.

- The key object’s equals() method is used to maintain the uniqueness of keys in the map.

- Value object’s hashCode() and equals() methods are not used in HashMap’s get() and put() methods.

- Hash code for null keys is always zero, and such entry object is always stored in zero index in Entry[ ].

7. Internal Working of HashSet?

- HashSet uses HashMap internally to store its objects. Whenever we create a HashSet object, one HashMap object associated with it is also created. This HashMap object is used to store the elements we enter in the HashSet. The elements we add into HashSet are stored as keys of this HashMap object. The value associated with those keys will be a constant.

- See the code to see PRESENT as an Object and Constructors who internally create MAP instances.

- The add() method of the HashSet class internally calls the put() method of backing the HashMap object by passing the element we have specified as a key and constant “PRESENT” as its value.

- The remove() method also works in the same manner.

map.remove(o)==PRESENTRemove() returns the previous value associated with the key, or null if there was no mapping for the key. So if the key is present, the value “PRESENT” will be returned which will be to “PRESENT” and hence return true else false if returns null.

Differences between Array, Vector, ArrayList, and LinkedList

1. Difference between Array and ArrayList

| Properties | Array | ArrayList |

| Static/Dynamic | The array is static in size. | ArrayList is dynamic in size. It can resize itself when needed. |

| Resizable | The array is a fixed-length data structure. | ArrayList is a variable length Collection class. |

| Primitive/Generic type | An array can store both objects and primitive types. | We cannot store primitive types in ArrayList. It automatically converts primitive type to wrapper class object. |

| Performance | It performs fast in comparison to ArrayList because of its fixed size. | resize() operation: Automatic resize will slow down the performance as it will use the temporary array to copy elements from the old array to a new array (by 50%).add() or get() operation: almost the same performance, as for ArrayList object these operations run in constant time. |

| Initialization | It is mandatory to provide the size of an array while initializing it directly or indirectly. | We can create an instance of ArrayList without specifying its size. Java creates an ArrayList of a default size of 10. |

| Iterating Values | We use for loop or for each loop to iterate over an array. | Apart from the for loop, and for each loop, we can also use an iterator to iterate over ArrayList. |

| Fetch Length | Array provides a length variable that denotes the length of an array. | ArrayList provides the size() method to determine the size of ArrayList. |

| Single or Multi-Dimensional | The array can be multi-dimensional. | ArrayList is always single-dimensional. |

| Storage in contiguous memory locations | The elements are contained in adjacent memory locations. | The objects are incapable of being contained in contiguous locations. |

| Insertion and storage of elements | The assignment operator is used for the storage of elements. | The add() method is utilized for the insertion of elements in an ArrayList. |

| Generics | Generics are not compatible with Arrays. | ArrayLists allow the use of Generics. |

2. Difference between ArrayList and Vector

| Properties | ArrayList | Vector |

| Synchronized | ArrayList is not synchronized. We prefer ArrayList over Vector because ArrayList can be synchronized explicitly using the Collections.synchronizedList() method. | Vector is synchronized. |

| Thread Safe | Not Thread Safe | Thread Safe |

| Performance | ArrayList is fast because it is non-synchronized. | Vector is slow because it is synchronized. |

| Legacy | ArrayList is not a legacy class. It is introduced in JDK 1.2 | Vector is a legacy class. |

| Size increment if the number of elements exceeds its capacity. | ArrayList increments 50% of the current array size. | Vector increments of 100% mean double the current array size. |

| Iterating Values | ArrayList uses the Iterator interface to traverse the elements. | A vector uses the Iterator interface or Enumeration interface to traverse the elements. |

3. Difference between ArrayList and LinkedList

| Properties | ArrayList | LinkedList |

| Internal Implementation | ArrayList internally uses a dynamic array to store its elements. | LinkedList uses a Doubly Linked List to store its elements. |

| Implementation | ArrayList implements only List. | LinkedList implements List as well as Queue. It can act as a queue as well. |

| Manipulation/add/delete operation | Manipulation with ArrayList is slow because it internally uses an array. If any element is removed from the array, all the other elements are shifted in memory. | Manipulation with LinkedList is faster than ArrayList because it uses a doubly linked list, so bit shifting is not required in memory. |

| Access/Get Operation | ArrayList is faster in storing and accessing data as internally Array is used which is random access. | LinkedList is slower than ArrayList in storing and accessing data as access requires node by node traversal. |

| Reverse Iterator | There is no descendingIterator() in ArrayList | LinkedList can be iterated in the reverse direction using descendingIterator() |

| Initial Capacity | If the constructor is not overloaded, then ArrayList creates an empty list of initial capacity 10 | There is no default capacity concept in the case of a LinkedList. Hence an empty list is created when a LinkedList is initialized. |

| Memory Overhead | In ArrayList Memory overhead is less as each index only holds the actual object(data). | Memory overhead in LinkedList is more as compared to ArrayList as a node in LinkedList needs to maintain the addresses of the next and previous nodes. |

If our application has many retrievals/access then manipulation in that case ArrayList is better else use LinkedList.

Conclusion: LinkedList element deletion and addition is faster compared to ArrayList.

Reason: LinkedList’s each element maintains two pointers (addresses) which point to both neighbor elements in the list. Hence removal only requires a change in the pointer location in the two neighbor nodes (elements) of the node that is going to be removed. In ArrayList, all the elements need to be shifted to fill out the space created by the removed element.

Hence if there is a requirement of frequent addition and deletion in the application then LinkedList is the best choice and if more search/fetch operation is required the ArrayList would be the best bet.

Comparable & Comparator

1. Why are Comparable and Comparator interfaces required in Java?

Java can not sort the custom objects like the way it sorts the array of primitive objects. For example, if we want to sort the array of integers then we can simply call Arrays.sort(array) method.

int[] array = { 10, 5, 9, 1 };

Arrays.sort(array);

System.out.println(Arrays.toString(array));Assume we have an Employee class and we tried sorting of array of employees then JVM will throw:- “ClassCastException: Employee cannot be cast to java.lang.Comparable”.

To resolve this error, the Employee class should implement the Comparable interface and provide an implementation of the compareTo() method in the Employee class.

public class Employee implements Comparable<Employee> {

private int id;

private String name;

// constructor, getter, setter, toString

@Override

public int compareTo(Employee o) {

return this.id - o.id;

}

}Employee[] empArr = new Employee[4];

empArr[0] = new Employee(5, "John");

empArr[1] = new Employee(9, "Paul");

empArr[2] = new Employee(1, "Amit");

empArr[3] = new Employee(10, "Ame");

Arrays.sort(empArr);

System.out.println(Arrays.toString(empArr));Output:-

[

Employee [id=1, name=Amit],

Employee [id=5, name=John],

Employee [id=9, name=Paul],

Employee [id=10, name=Ame]

]

Now, the Employee class has default sorting based on ID. But we want to sort it based on the employee name, instead of ID without changing the default behavior. In that case, the Comparator interface is useful.

public class Employee implements Comparable<Employee> {

private int id;

private String name;

// constructor

// getter and setter

// toString

@Override

public int compareTo(Employee o) {

return this.id - o.id;

}

public static Comparator<Employee> nameComparator = new Comparator<Employee>() {

@Override

public int compare(Employee e1, Employee e2) {

return e1.getName().compareTo(e2.getName());

}

};

}// Sort based on name ascending

Arrays.sort(empArr, Employee.nameComparator);

System.out.println(Arrays.toString(empArr));[ Employee [id=10, name=Ame], Employee [id=1, name=Amit], Employee [id=5, name=John], Employee [id=9, name=Paul] ]

Arrays.sort(empArr); // sort based on ID

Arrays.sort(empArr, Employee.nameComparator); // sort based on NameThe same can be done in Java8 without creating an explicit nameComparator in the Employee class:-

// Sorting by name using lambda expression

Arrays.sort(empArr, Comparator.comparing(Employee::getName));

// or

// Arrays.sort(empArr, Comparator.comparing(e -> e.getName()));To sort in descending order of the name:-

// Sorting in descending order of name

Arrays.sort(empArr, Comparator.comparing(Employee::getName).reversed());

// or

// Arrays.sort(empArr, Comparator.comparing(e -> ((Employee) e).getName()).reversed());2. Difference between Comparable and Comparator interfaces?

- Comparable does not need to be passed in the method argument whereas Comparator needs to be passed as the method argument.

- Comparable belongs to

java.langpackage whereas Comparator belongs tojava.utilpackage. - Comparable will provide the natural sorting order whereas Comparator can provide multiple ways of sorting.

- To use a Comparable interface, the source code of the entity class needs to be changed. The Entity class should implement a Comparable interface whereas to use a Comparator interface change in the source code of the entity class is not required, we can create a separate class implementing a Comparable interface, and use that.

public class Sample implements Comparator<Employee> {

@Override

public int compare(Employee e1, Employee e2) {

return e1.getName().compareTo(e2.getName());

}

}public class Test {

public static void main(String[] args) {

Employee[] empArr = new Employee[4];

empArr[0] = new Employee(5, "John");

//....

Sample comparator = new Sample();

Arrays.sort(empArr, comparator);

System.out.println(Arrays.toString(empArr));

}

}3. Write different Comparators in Java to sort employees based on name, age, and Address.

In order to sort Employee objects on different criteria, we need to create multiple comparators e.g. NameComparator, AgeComparator, and AddressComparator, this is known as custom sorting in Java.

This is different from the natural ordering of objects, provided by the compareTo() method of java.lang.Comparable interface.

Compare method returns

- Negative if 1st parameter is < 2nd parameter

- Positive if 1st parameter is > 2nd parameter

- 0 if 1st parameter is = 2nd parameter

Ordering objects of user-defined classes is done using a comparator interface. A comparator object can compare two objects that belong to the same class. Compare obj1 and obj2 using the following function.

A user-defined class’s objects are ordered using the Java Comparator interface.

The compare(Object obj1, Object obj2) and equals methods are both included in the java.util package (Object element).

It supports different sorting sequences, which means you can order the items by any data member, such as rollno, name, age, or anything else.

public class Address {

private String streetName;

private Integer pincode;

// constructor, tostring, getter and setters

}public class Employee {

private Integer id;

private Integer age;

private String name;

private Address address;

// constructor, tostring, getter and setters

}import java.util.Arrays;

import java.util.List;

import java.util.stream.Collectors;

public class Test {

public static void main(String[] args) {

Employee emp1 = new Employee(1, 29, "Tom", new Address("Street1", 456));

Employee emp2 = new Employee(2, 28, "Jerry", new Address("Street2", 344));

Employee emp3 = new Employee(3, 30, "Rocco", new Address("Street3", 12));

Employee emp4 = new Employee(4, 18, "Patil", new Address("Street4", 567));

Employee emp5 = new Employee(5, 22, "Patel", new Address("Street5", 678));

List<Employee> employees = Arrays.asList(emp1, emp2, emp3, emp4, emp5);

System.out.println(employees);

// sort by age

List<Employee> employeesSortedByAge = employees.stream()

.sorted((e1, e2) -> e1.getAge() - e2.getAge())

.collect(Collectors.toList());

System.out.println(employeesSortedByAge);

// sort by name

List<Employee> employeeSortedByName = employees.stream()

.sorted((e1, e2) -> e1.getName().compareTo(e2.getName()))

.collect(Collectors.toList());

System.out.println(employeeSortedByName);

// sort by pincode of address

List<Employee> employeesSortedByAddressPincode = employees.stream()

.sorted((e1, e2) -> Integer.compare(e1.getAddress().getPincode(),

e2.getAddress().getPincode())

).collect(Collectors.toList());

System.out.println(employeesSortedByAddressPincode);

}

}Coding Questions

Two Sum with Hash Map

Given two inputs first an array of integers “nums” and an integer “target”, return indices of the two numbers such that they add up to the target.

You may assume that each input will have exactly one solution, and you may not use the same element twice. You can return the answer in any order.

Example 1:

Input: nums [2,7,11,15], target = 9

Output: [0,1]

Output: Because nums[0] + nums[1] == 9, we return [0, 1].

Example 2:

Input: nums = [3,2,4], target = 6

Output: [1,2]

Example 3:

Input: nums [3,3], target = 6

Output: [0,1]

import java.util.Arrays;

import java.util.HashMap;

import java.util.Map;

public class Test {

public static void main(String[] args) {

int[] nums = { 2, 7, 11, 15 };

int target = 13;

Test test = new Test();

System.out.println(Arrays.toString(test.twoSum(nums, target)));

}

public int[] twoSum(int[] nums, int target) {

Map<Integer, Integer> input = new HashMap<>();

for (int i = 0; i < nums.length; i++) {

int secondNumber = target - nums[i];

if (input.containsKey(secondNumber)) {

return new int[] { input.get(secondNumber), i };

} else {

input.put(nums[i], i);

}

}

return new int[] { -1, -1 };

}

}Using Java8 Stream:-

private static int[] m1(int[] nums, int target) {

Map<Integer, Integer> input = new HashMap<>();

return IntStream.rangeClosed(0, nums.length - 1).filter(i -> {

int secondElement = target - nums[i];

if (input.containsKey(secondElement)) {

return true;

} else {

input.put(nums[i], i);

return false;

}

})

.mapToObj(i -> new int[] { input.get(target - nums[i]), i })

.findFirst()

.orElse(new int[] { -1, -1 });

}Check Similar Problems:- Two Pointer Approach

Pair of Elements in an Array

How to find all pairs of elements in an array whose sum is equal to a given number?

Given an array of integers, and a number ‘sum’, find the number of pairs of integers in the array whose sum is equal to ‘sum’. Example:-

Input: arr[ ]= {1, 5, 7, -1}, sum = 6

Output: 2

Explanation: Pairs with sum 6 are (1, 5) and (7, -1)

public static void main(String[] args) {

int[] arr = { 1, 5, 7, -1 };

int sum = 6;

List<int[]> pairs = getPairsCount(arr, sum);

pairs.forEach(n -> System.out.println(Arrays.toString(n)));

}

private List<int[]> getPairsCount(int[] arr, int sum) {

List<Integer> hm = new ArrayList<>();

List<int[]> pairs = new ArrayList<>();

Arrays.stream(arr).forEach(n -> {

if (hm.contains(sum - n)) {

pairs.add(new int[] { n, sum - n });

}

hm.add(n);

});

return pairs;

}If you enjoyed this post, share it with your friends. Do you want to share more information about the topic discussed above or do you find anything incorrect? Let us know in the comments. Thank you!