Spring Boot Batch API Introduction

The Batch API processes large data sets and transfers them from a source to a destination. The source and destination can each be a database or a file system. Examples:-

- Job#1 MySQL Database to CSV(Excel) File.

- Job#2 CSV to MongoDB Import.

Also see:-

- CSV To MySQL Database Spring Boot Batch API Example

- CSV to MongoDB Spring Boot Batch Example

- MySQL to CSV Spring Boot Batch Example

- MongoDB to CSV Spring Boot Batch Example

- MySQL to XML Spring Boot Batch Example

What is the difference between Spring JDBC Batch API and Spring Boot batch API?

- JDBC Batch API sends multiple Insert/Update SQL statements to the database together as one batch, typically using one network call. Example: 5 Student rows inserted using one n/w call.

- Spring Boot Batch API transfers and processes data. It reads items from the source, processes them, and writes them to the destination. Examples: data export, database migration, copying one database to another, report generation.

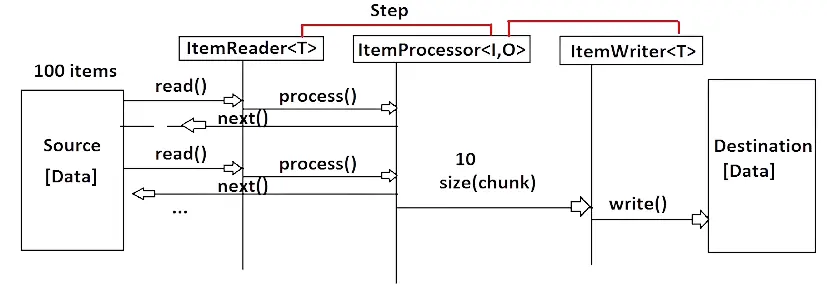

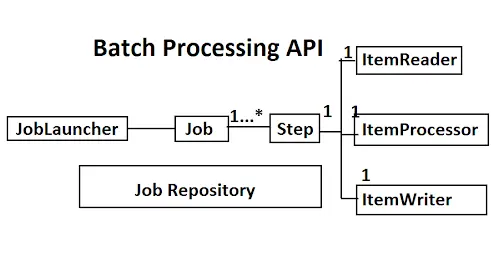

Batch Processing API Details

- JOB: A complete Batch Processing Task is called a Job.

- STEP: Every JOB contains at least one STEP and at most n STEPs. A STEP is a part of a JOB. Every STEP contains the following three:-

  - Reader/Item Reader: Reads data from the source and gives it to the processor.

  - Processor/Item Processor: Processes (calculations, logic checks, etc.) the data given by the Reader.

  - Writer/Item Writer: Writes the data given by the processor to the destination.

- JobLauncher: Starts the execution of a Job.

- Job Repository: The storage that holds the details of executed Jobs (status, start and end time, … etc).

1 Step = 1 Reader + 1 Processor + 1 Writer

- The Reader and Processor are executed once per record/item.

- The Writer is executed once per chunk, and the chunk size is given by the programmer. For example, with chunk=10 the writer is executed one time for every 10 items. The chunk size is the maximum number of items handed to the writer in one call.

For example, if the source has 100 items and chunk size = 50, then:-

- The reader executes 100 times.

- The processor executes 100 times.

- The writer executes 2 times (100 / 50 = 2).

As another example, if the source has 100 items and chunk size = 30, then:-

- The reader executes 100 times.

- The processor executes 100 times.

- The writer executes 4 times (100 / 30 = 3.33, rounded up to 4). It is executed once each for items 1-30, 31-60, 61-90, and 91-100; the last chunk holds only the remaining 10 items.
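The two examples above can be checked with a small plain-Java simulation. This is only a sketch of the counting logic and does not use Spring Batch itself; the class name `ChunkCounter` and the method `countCalls` are hypothetical. A real Spring Batch reader is also called one extra time at the end, where it returns null to signal that the input is exhausted.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java simulation of chunk-oriented processing (not Spring Batch code).
class ChunkCounter {

    /** Returns {readerCalls, processorCalls, writerCalls} for the given sizes. */
    static int[] countCalls(int totalItems, int chunkSize) {
        int reads = 0;
        int processes = 0;
        int writes = 0;
        List<Integer> chunk = new ArrayList<>();

        for (int item = 1; item <= totalItems; item++) {
            reads++;                          // reader hands over one item
            processes++;                      // processor handles that item
            chunk.add(item);
            if (chunk.size() == chunkSize) {
                writes++;                     // writer receives a full chunk
                chunk.clear();
            }
        }
        if (!chunk.isEmpty()) {
            writes++;                         // writer receives the last, partial chunk
        }
        return new int[] {reads, processes, writes};
    }

    public static void main(String[] args) {
        System.out.println("chunk=50 writer calls: " + countCalls(100, 50)[2]);
        System.out.println("chunk=30 writer calls: " + countCalls(100, 30)[2]);
    }
}
```

The writer count is the item count divided by the chunk size, rounded up, which is why 100 items with chunk size 30 need 4 writer calls.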

Reader GenericType (ex: String) and Processor Input Type (ex: String) must match because reader output will become processor input. Processor Output Type (ex: Employee) and Writer Generic Type (ex: Employee) must match because processor output will become writer input.

To create one step we need:-

- Step Name

- Reader object

- Processor Object

- Writer Object
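The type-matching rule and the four ingredients of a step can be sketched with plain Java generics. The types below (`Employee`, `SimpleStep`, `StepTypesDemo`) are hypothetical stand-ins written for this illustration; in Spring Batch a real Step is created through its own builder classes.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Hypothetical domain type for this sketch.
record Employee(int id, String name) {}

// I = type the reader produces and the processor accepts.
// O = type the processor produces and the writer accepts.
record SimpleStep<I, O>(String name,
                        Supplier<I> reader,          // returns null when input is exhausted
                        Function<I, O> processor,
                        Consumer<List<O>> writer) {

    void run() {
        List<O> processed = new ArrayList<>();
        for (I item = reader.get(); item != null; item = reader.get()) {
            processed.add(processor.apply(item));
        }
        writer.accept(processed);                    // one chunk, to keep the sketch short
    }
}

class StepTypesDemo {

    static List<Employee> runDemo() {
        Iterator<String> lines = List.of("1,Asha", "2,Ravi").iterator();
        List<Employee> destination = new ArrayList<>();

        // Reader gives String, processor maps String to Employee, writer takes Employee.
        SimpleStep<String, Employee> step = new SimpleStep<>(
                "csv-to-employee",
                () -> lines.hasNext() ? lines.next() : null,
                line -> {
                    String[] parts = line.split(",");
                    return new Employee(Integer.parseInt(parts[0]), parts[1]);
                },
                destination::addAll);
        step.run();
        return destination;
    }

    public static void main(String[] args) {
        System.out.println(runDemo());
    }
}
```

If the processor were declared to return a type other than `Employee`, the compiler would reject the step, which is the same mismatch the rule above warns about.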

Dependency for Spring Batch API

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-batch</artifactId>

</dependency>

The org.springframework.batch.item package contains these functional interfaces:-

- ItemReader<T>:-

T read() throws Exception, UnexpectedInputException, ParseException, NonTransientResourceException;

- ItemProcessor<I, O>:-

O process(@NonNull I item) throws Exception;

- ItemWriter<T>:-

void write(@NonNull Chunk<? extends T> chunk) throws Exception;

All the above interfaces are provided by the Spring Batch API inside the package org.springframework.batch.item. Spring Batch already provides implementation classes for the ItemReader and ItemWriter interfaces, for example FlatFileItemReader, JdbcCursorItemReader, JpaItemWriter, and MongoItemWriter.

The processing logic, however, depends on the concept/data we are working with, so we need to define it ourselves. Therefore ItemProcessor implementation classes are not provided and we have to develop them.
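A custom processor could look roughly like the following. To keep the sketch compilable without the library, `ItemProcessor` is redeclared locally with the same shape as the Spring Batch interface; in a real project you would import org.springframework.batch.item.ItemProcessor instead. The `ParsedEmployee` type, the class name, and the "id,name,salary" line format are assumptions made for this example.

```java
// Local stand-in with the same shape as Spring Batch's ItemProcessor<I, O>.
interface ItemProcessor<I, O> {
    O process(I item) throws Exception;
}

// Hypothetical domain type for this sketch.
record ParsedEmployee(int id, String name, double salary) {}

// Converts one CSV line of the assumed form "id,name,salary" into a ParsedEmployee.
class EmployeeLineProcessor implements ItemProcessor<String, ParsedEmployee> {

    @Override
    public ParsedEmployee process(String line) {
        String[] parts = line.split(",");
        if (parts.length != 3) {
            // In Spring Batch, returning null from a processor filters the item out,
            // so it never reaches the writer.
            return null;
        }
        return new ParsedEmployee(
                Integer.parseInt(parts[0].trim()),
                parts[1].trim(),
                Double.parseDouble(parts[2].trim()));
    }

    public static void main(String[] args) {
        System.out.println(new EmployeeLineProcessor().process("7, Meera, 52000.50"));
    }
}
```

Here the input type is String (what a flat-file reader would typically hand over) and the output type is the object the writer expects.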

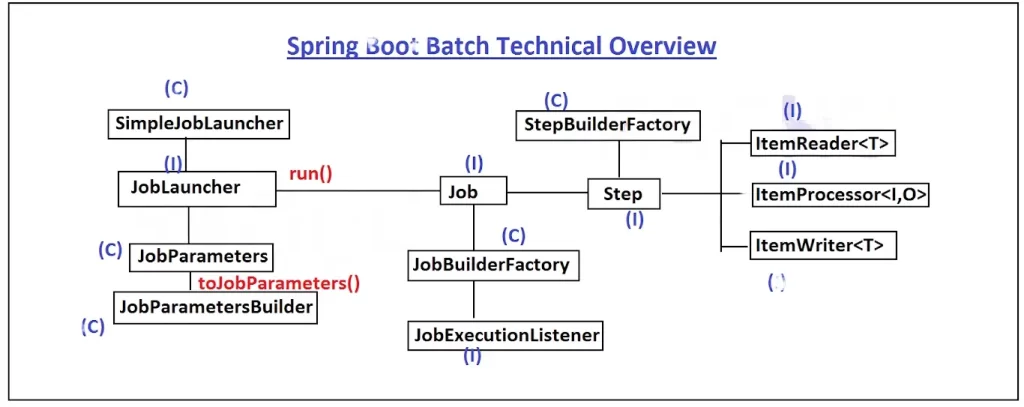

Spring Boot Technical View of Batch API

- ItemReader<T>(I): Reads data from the source (Database, FileSystem). Implementation classes are provided by the Spring Batch API, so we need not write a new implementation class. Just configure an existing class.

- ItemProcessor<I,O>(I): For this, we should define our own implementation class with our logic.

- ItemWriter<T>(I): Writes data to the destination (DB, File). Implementation classes are provided by the Spring Batch API, so we need not write a new implementation class. Just configure an existing class.

- Step(I): A Step is a collection of name + chunk + reader object + processor object + writer object.

- StepBuilderFactory(C): Step is an interface whose objects are created using StepBuilderFactory(C). This class object is auto-configured by the Batch API, so use it directly (autowire it).

- Job: A collection of name + step caller (incrementer) + listener object (optional) + step objects in order.

- JobBuilderFactory(C): This class is used to create the Job object. This class object is auto-configured by the Batch API, so use it directly (autowire it).

- JobExecutionListener(I): Optional. Used to execute any logic (find the time taken, batch status, etc.) before starting and after finishing job processing. We should define an implementation class for this.

- JobLauncher(I) and its implementation class SimpleJobLauncher(C): Used to call/execute Job objects. The Spring Batch API provides the implementation class and auto-configures it, so autowire it directly.

- JobParameters(C), created using JobParametersBuilder(C): Used to pass any input to the Job (key=val, ex: clientId, AppVersion, DB Location … etc). It can also be empty.

Note: in Spring Batch 5, StepBuilderFactory and JobBuilderFactory are deprecated, and steps and jobs are built with StepBuilder and JobBuilder directly.

In the Spring framework, using a properties file is not mandatory, but in Spring Boot almost all configuration inputs are given through properties. The Batch API was designed on plain Spring, which is why JobParameters exists. With Spring Boot it is used much less, because values can be passed directly through the properties file. Even so, a JobParameters object (possibly empty) must still be provided when running a job through the JobLauncher.

BatchStatus is an enum having:-

- COMPLETED: The job finished successfully.

- STARTING: The job is about to start batch processing.

- STARTED: The job is running.

- STOPPING: A stop was requested and the job is waiting for the current step to finish.

- STOPPED: The job was stopped on request.

- FAILED: An exception occurred in the batch process.

- ABANDONED: The job execution did not stop properly (for example, problems in the server, a forced stop of the server, or the DB not responding) and cannot be restarted.

- UNKNOWN: The job is in an uncertain state; check the log files.

ItemReader & ItemWriter Implementation Classes

The following implementation classes of ItemReader and ItemWriter are commonly used together, grouped by data format. See more:- https://docs.spring.io/spring-batch/reference/

CSV:-

- FlatFileItemReader

- FlatFileItemWriter

JDBC:-

- JdbcBatchItemWriter

- JdbcCursorItemReader

MongoDB:-

- MongoItemReader

- MongoItemWriter

JPA:-

- JpaPagingItemReader

- JpaItemWriter

XML:-

- StaxEventItemReader

- StaxEventItemWriter

JSON:-

- JsonItemReader

- JsonFileItemWriter

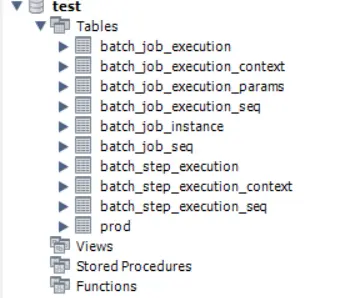

JobRepository

Repository here means storage: tables are created for storing the details of executed batches.

On application startup, Spring Batch creates the following additional tables:- batch_job_execution, batch_job_execution_context, batch_job_execution_params, batch_job_execution_seq, batch_job_instance, batch_job_seq, batch_step_execution, batch_step_execution_context, batch_step_execution_seq

To avoid creating these tables in an external database, we can use the H2 database as the repository. With this value the tables are created only for an embedded database:-

spring.batch.initialize-schema=embedded

(The default value is embedded.)

If we don't want Spring Boot to create these tables at all, not even in the temporary database:-

spring.batch.initialize-schema=never

Or, we can use an external database such as MySQL or Oracle and have the tables created there:-

spring.batch.initialize-schema=always

On Spring Boot 2.5 and later, this key is named spring.batch.jdbc.initialize-schema.

The initialize-schema property internally uses the enum DataSourceInitializationMode:-

DataSourceInitializationMode {

ALWAYS, EMBEDDED, NEVER

}

Sample Queries

- Fetch instance ID using job name:-

SELECT JOB_INSTANCE_ID FROM batch_job_instance WHERE JOB_NAME='csv-job';

- Fetch Date and Time + Status data using Instance ID:-

SELECT START_TIME, END_TIME, STATUS FROM batch_job_execution

WHERE JOB_INSTANCE_ID IN (

SELECT JOB_INSTANCE_ID FROM batch_job_instance

WHERE JOB_NAME='csv-job'

);

- Fetch Job Execution ID using Instance ID:-

SELECT JOB_EXECUTION_ID FROM batch_job_execution

WHERE JOB_INSTANCE_ID IN (

SELECT JOB_INSTANCE_ID FROM batch_job_instance

WHERE JOB_NAME='csv-job'

);

- Fetch Step details using Job Execution ID:-

SELECT STEP_NAME, START_TIME, END_TIME, STATUS

FROM batch_step_execution

WHERE JOB_EXECUTION_ID IN(

SELECT JOB_EXECUTION_ID FROM batch_job_execution

WHERE JOB_INSTANCE_ID IN (

SELECT JOB_INSTANCE_ID FROM batch_job_instance

WHERE JOB_NAME='csv-job'

)

);

The key spring.batch.job.enabled controls whether jobs are executed once on application startup. Its default value is true. To avoid batch processing on app startup, set the value to false, because we launch the job ourselves from a Controller/Runner class:-

spring.batch.job.enabled=false

Scheduling the Batch

To schedule the batch we can use Spring's scheduler. Steps to run the batch with a scheduler:-

- On the starter (main) class, add @EnableScheduling

- Create a class to execute Batch:-

package com.knowprogram.demo.controller;

import org.springframework.batch.core.Job;

import org.springframework.batch.core.JobParameters;

import org.springframework.batch.core.JobParametersBuilder;

import org.springframework.batch.core.JobParametersInvalidException;

import org.springframework.batch.core.launch.JobLauncher;

import org.springframework.batch.core.repository.JobExecutionAlreadyRunningException;

import org.springframework.batch.core.repository.JobInstanceAlreadyCompleteException;

import org.springframework.batch.core.repository.JobRestartException;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.scheduling.annotation.Scheduled;

import org.springframework.stereotype.Component;

@Component

public class ProductBatch {

@Autowired

private JobLauncher jobLauncher;

@Autowired

private Job job;

@Scheduled(cron = "0 0 13 * * *")

public void execute() throws JobExecutionAlreadyRunningException,

JobRestartException,

JobInstanceAlreadyCompleteException,

JobParametersInvalidException {

JobParameters params = new JobParametersBuilder()

.addLong("time", System.currentTimeMillis())

.toJobParameters();

jobLauncher.run(job, params);

}

}

We can also pass the cron value through a property placeholder:-

@Scheduled(cron = "${my.input.data}")

In application.properties:-

my.input.data=0 0 13 * * *
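Spring cron expressions have six fields: second, minute, hour, day of month, month, and day of week. The value 0 0 13 * * * therefore means every day at 13:00:00. The sketch below only illustrates that meaning with java.time; it is not how Spring parses cron expressions, and the class `DailyOnePm` and method `nextRun` are hypothetical names.

```java
import java.time.LocalDateTime;
import java.time.LocalTime;

// Illustration only: computes the next daily 13:00:00 run time,
// which is the schedule that "0 0 13 * * *" describes.
class DailyOnePm {

    static LocalDateTime nextRun(LocalDateTime now) {
        LocalDateTime todayAtOne = now.toLocalDate().atTime(LocalTime.of(13, 0));
        // Before 13:00 the job runs later today; otherwise it runs tomorrow.
        return now.isBefore(todayAtOne) ? todayAtOne : todayAtOne.plusDays(1);
    }

    public static void main(String[] args) {
        System.out.println("Next run: " + nextRun(LocalDateTime.now()));
    }
}
```

Changing the third field of the expression (the hour) moves the daily run time, for example 0 0 9 * * * for 09:00:00.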
